Unit-testing Streamlit Applications

Overview of Streamlit

Streamlit is a Python framework for putting together quick data visualisation dashboards. It is easy to use and doesn’t require you to touch any HTML/CSS unless you want to (though if you do, it doesn’t make it easy). Here is an example so you can get a feel for it.

import streamlit as st

import numpy as np

import pandas as pd

from matplotlib import pyplot as plt

st.header("My example app")

data = pd.DataFrame({"stonks": (1 + np.random.normal(size=50) / 10).cumprod()})

st.dataframe(data.T)

if st.button("Draw Plot"):

fig, ax = plt.subplots()

ax.plot(data["stonks"])

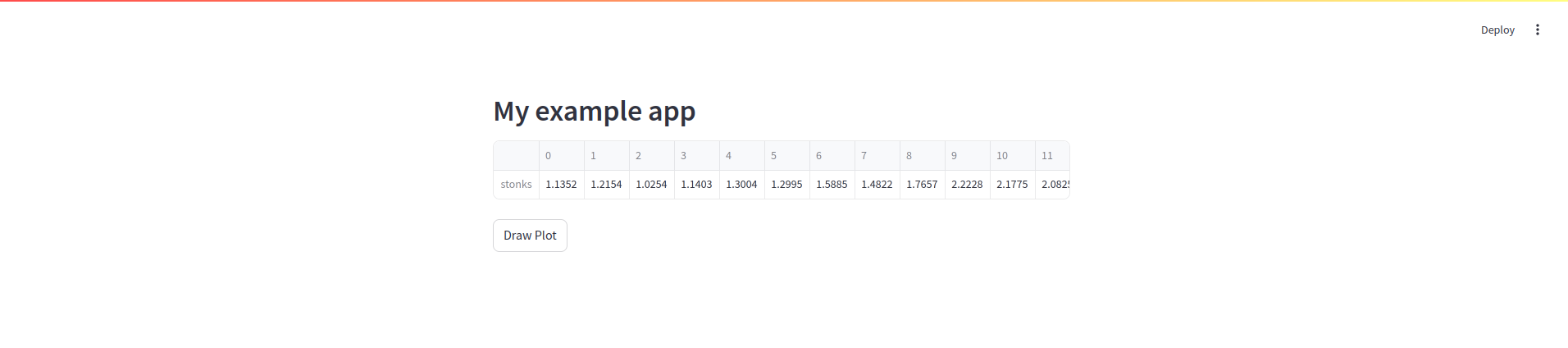

st.pyplot(fig)When you run the app with streamlit run app.py, you see this:

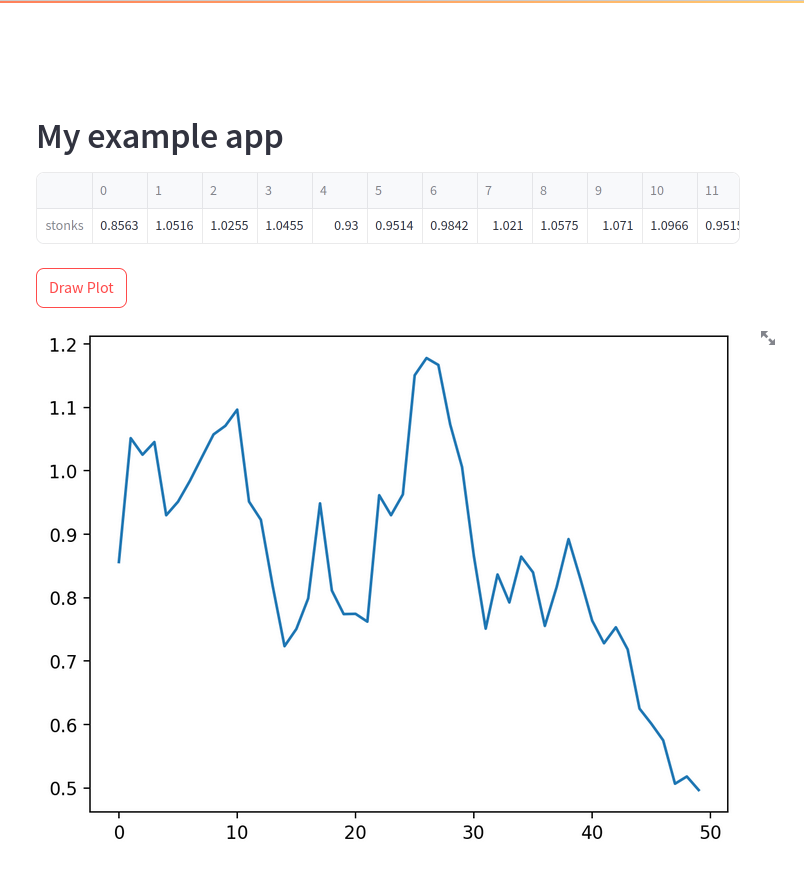

After pressing the button, a nice matplotlib plot is displayed.

Built-in Support for Testing

In my work, I recently needed to build a fairly critical piece of infrastructure using Streamlit. So, we needed automated testing. Luckily, Streamlit provides a whole testing framework. Just import st.testing.v1.AppTest, follow the documentation and that should be it, right?

If it were this easy, you wouldn’t be reading this.

Let’s give this a go. First, we make our app a bit more civilized.

    import streamlit as st
    import numpy as np
    import pandas as pd
    from matplotlib import pyplot as plt


    @st.cache_data
    def load_data_from_external_resource():
        raise ValueError("Could not connect to the database from the test environment!")


    def main():
        st.header("My example app")

        data = pd.DataFrame({"stonks": (1 + np.random.normal(size=50) / 10).cumprod()})
        st.dataframe(data.T)

        if st.button("Draw Plot"):
            fig, ax = plt.subplots()
            ax.plot(data["stonks"], label="Portfolio")
            benchmark = load_data_from_external_resource()
            ax.plot(benchmark, label="Benchmark")
            ax.legend()
            st.pyplot(fig)


    if __name__ == '__main__':
        main()

I’ve wrapped everything in a main function, and I’ve added load_data_from_external_resource(), a fake external dependency which we will need to mock. I have used Streamlit’s built-in caching mechanism, choosing carefully between @st.cache_data and @st.cache_resource.

We read the documentation for testing and come up with this simple behaviour test, implemented using pytest.

    from unittest.mock import patch, MagicMock

    import pandas as pd
    from streamlit.testing.v1 import AppTest

    from app import main


    @patch("app.load_data_from_external_resource")
    def test_flow(m_load_data):
        m_load_data.return_value = pd.Series()
        at = AppTest.from_function(main)
        at.run()
        at.button[0].click().run()

Unfortunately, this fails with:

    test.py:15: in test_flow
        at.button[0].click().run()
    .venv/lib/python3.12/site-packages/streamlit/testing/v1/element_tree.py:228: in __getitem__
        return self._list[idx]
    E   IndexError: list index out of range

So we don’t have a button to click? We also get this output in stderr:

    2024-06-25 23:32:31.321 Uncaught app exception
    Traceback (most recent call last):
      File ".venv/lib/python3.12/site-packages/streamlit/runtime/scriptrunner/script_runner.py", line 589, in _run_script
        exec(code, module.__dict__)
      File "/tmp/tmpfak23eti/37448f24cdf8e012be03169487f8e460", line 17, in <module>
        main(*__args, **__kwargs)
      File "/tmp/tmpfak23eti/37448f24cdf8e012be03169487f8e460", line 2, in main
        st.header("My example app")
        ^^
    NameError: name 'st' is not defined

Here be dragons.

Tested Streamlit Functions are Strings

Looking into the source code we see this:

    def from_function(
        cls,
        script: Callable[..., Any],
        *,
        default_timeout: float = 3,
        args=None,
        kwargs=None,
    ) -> AppTest:
        source_lines, _ = inspect.getsourcelines(script)
        source = textwrap.dedent("".join(source_lines))
        module = source + f"\n{script.__name__}(*__args, **__kwargs)"
        return cls._from_string(
            module, default_timeout=default_timeout, args=args, kwargs=kwargs
        )

The function we pass gets turned into a string and then passed deeper into the framework. There, it gets written to a temporary file which is used by the AppTest.
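To get a feel for what "turned into a string" implies, here is a small stdlib-only sketch of the same trick. It is not Streamlit's code: APP_SOURCE, run_script and the toy main are made-up names, and ast.get_source_segment stands in for inspect.getsourcelines so that the example runs without a file on disk. The point is only that a function's source text, executed on its own, brings none of the module-level imports with it.

```python
import ast

# A toy "app.py": the import lives at module level, outside main().
APP_SOURCE = '''
import math

def main():
    return math.sqrt(16)
'''

# Extract only the text of main(), roughly what inspect.getsourcelines(main)
# would hand back for a function defined in a real file.
tree = ast.parse(APP_SOURCE)
main_node = next(n for n in tree.body if isinstance(n, ast.FunctionDef))
function_source = ast.get_source_segment(APP_SOURCE, main_node)

# Rebuild a "script" from the function text plus a call to it.
script = function_source + "\nresult = main()"


def run_script(text):
    """Execute script text in a fresh namespace and report what happened."""
    namespace = {}
    try:
        exec(text, namespace)
        return namespace["result"]
    except NameError as exc:
        return f"NameError: {exc}"


# The rebuilt script never saw `import math`, so this reports a NameError.
print(run_script(script))

# With the import moved inside the function, the rebuilt script is self-sufficient.
fixed = "def main():\n    import math\n    return math.sqrt(16)\nresult = main()"
print(run_script(fixed))
```

The second variant is the same idea as importing locally inside the function you hand to from_function.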

The reason we are getting the NameError is that the import streamlit as st statement sits at the top of our app.py which is outside the function. In this new script file that Streamlit creates, the import is in fact missing. To be fair, we have been warned:

AppTest can be initialized by one of three class methods:

- st.testing.v1.AppTest.from_file (recommended)
- st.testing.v1.AppTest.from_string
- st.testing.v1.AppTest.from_function

Using from_file is not possible, unfortunately, since it breaks mocking. More on that later. Instead, I wrote this test runner function, which does the necessary import locally:

    def app_runner():
        from app import main
        main()

I then create AppTest like this: AppTest.from_function(app_runner). If we run our test_flow function again with pytest, the test passes.

AppTest does not fail on exception

We now understand that the NameError was caused by Streamlit copying the source of the main function without the imports from the file in which it was declared. But remember that the NameError only appeared in stderr. The unit test failed because we couldn’t find a button to click, which was only a second-order effect of the NameError. For the sake of our sanity during debugging, and to prevent silent failures during testing, we would like the test to fail when the underlying app raises an exception.

When Streamlit encounters an exception, it logs it to stderr and also adds an element to the app, showing the exception to the user. To detect a failure, we need to either monitor stderr or traverse at._tree looking for the right kind of element. The attribute starts with an underscore, which frightened me, so I went with the first option. Sue me:

    import sys

    import pytest


    @pytest.fixture(scope="function")
    def st_test(capsys):
        yield
        out, err = capsys.readouterr()
        # Don't swallow stdout and stderr
        print(out, end="")
        print(err, file=sys.stderr, end="")
        assert "Uncaught app exception" not in err, f"The app raised an exception. Captured stderr:\n{err}"


    def test_flow(st_test):
        ...

Running it again, the test fails with this output:

    AssertionError: The app raised an exception. Captured stderr:
    2024-07-08 20:06:00.996 Uncaught app exception
    Traceback (most recent call last):
      File ".venv/lib/python3.12/site-packages/streamlit/runtime/scriptrunner/script_runner.py", line 589, in _run_script
        exec(code, module.__dict__)
      File "/tmp/tmpxpwe7skj/986a5ec4f91b87238afba1934796bbc5", line 7, in <module>
        method_under_test(*__args, **__kwargs)
      File "/tmp/tmpxpwe7skj/986a5ec4f91b87238afba1934796bbc5", line 5, in method_under_test
        main()
      File "app.py", line 22, in main
        benchmark = load_data_from_external_resource(data["stonks"])
                    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "app.py", line 9, in load_data_from_external_resource
        raise ValueError("Could not connect to the database from the test environment!")
    ValueError: Could not connect to the database from the test environment!

Much better! No more missing imports, and no more silent test failures.

Mocking works as you would expect

We modify our test using unittest.mock.patch:

    @patch("app.load_data_from_external_resource")
    def test_flow(m_load_data: MagicMock, st_test):
        m_load_data.return_value = pd.Series()
        at = AppTest.from_function(app_runner)
        at.run()
        at.button[0].click().run()
        m_load_data.assert_called_once()

The test now passes. All is well. As long as you’re using from_function, not from_file.

Why couldn’t we simply use from_file and avoid the boilerplate app_runner function? When you use from_file, mocking just doesn’t work. Initially I assumed that this was because AppTest was spawning a subprocess for some reason. I then hacked together a framework in which the mocks would write their call counts to stderr, and the “parent process” would use pytest’s capsys fixture to parse those messages and make assertions… The irritation this caused me is what made me want to get this post out.

But the actual reason mocks didn’t work is that AppTest creates a copy of the file passed as the argument, so unittest.mock.patch was using the wrong import path. Now, from_function also creates a temporary file, but because the local import in the app_runner function uses the same path that you would naturally pass to patch, mocking works just fine. A slightly nicer solution would be to extract the temporary file name from AppTest and use from_file. But I bet there would be more underscores to be afraid of.
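The import-path point is easy to check without Streamlit. Below is a stdlib-only sketch, assuming a made-up module called fake_app with hypothetical load_data and main functions. Executing a copy of the source in a fresh namespace plays the role of the copied script, and a local import plays the role of app_runner. It only illustrates the mechanism described above, not what Streamlit does internally.

```python
import sys
import types
from unittest.mock import patch

APP_SOURCE = '''
def load_data():
    return "real data"

def main():
    return load_data()
'''

# Register the source as an importable module named "fake_app" (hypothetical).
fake_app = types.ModuleType("fake_app")
exec(APP_SOURCE, fake_app.__dict__)
sys.modules["fake_app"] = fake_app


def run_as_copied_script():
    """Execute a fresh copy of the source, detached from the fake_app module."""
    namespace = {"__name__": "__main__"}
    exec(APP_SOURCE + "\nresult = main()", namespace)
    return namespace["result"]


def run_via_local_import():
    """Import the real module at call time, like the app_runner wrapper does."""
    from fake_app import main
    return main()


with patch("fake_app.load_data", return_value="mocked data"):
    copied_result = run_as_copied_script()    # uses its own, unpatched load_data
    imported_result = run_via_local_import()  # goes through the patched module

print(copied_result)    # real data
print(imported_result)  # mocked data
```

The patch is applied to the module object named in the target string, so only code that looks the function up through that module sees the mock.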

Streamlit cache does not get cleared between test methods

If we were to run the same test twice, it would fail:

FAILED test.py::test_flow_again_just_in_case - AssertionError: Expected '_db_call' to have been called once. Called 0 times.
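This kind of failure can be reproduced without Streamlit. In the sketch below, functools.lru_cache is only a stand-in for @st.cache_data, and Database, load_benchmark and run_test_once are hypothetical names, so treat it as an analogy rather than a description of Streamlit's cache.

```python
import functools
from unittest.mock import patch


class Database:
    """Hypothetical external dependency that is unreachable from tests."""

    @staticmethod
    def fetch_benchmark():
        raise ConnectionError("no database in the test environment")


@functools.lru_cache(maxsize=None)  # stand-in for @st.cache_data
def load_benchmark():
    return Database.fetch_benchmark()


def run_test_once():
    """Mock the database, call the cached loader, return the mock's call count."""
    with patch.object(Database, "fetch_benchmark", return_value=(1.0, 1.1)) as m_fetch:
        load_benchmark()
        return m_fetch.call_count


first = run_test_once()       # cache is empty, so the mock is called
second = run_test_once()      # cache hit: the fresh mock is never reached
load_benchmark.cache_clear()  # what the fixture teardown needs to do
third = run_test_once()       # cache is empty again, so the mock is called

print(first, second, third)  # 1 0 1
```

The second run is the interesting one: the mock is new, but the cached value from the first run is still there, so an assert_called_once() on it would fail.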

The reason is straightforward: the Streamlit cache does not get cleared between instantiations of AppTest. This is actually kind of justifiable: if you wanted to test an app that had multiple pages, or if you wanted to test the caching behaviour itself, an auto-clearing cache could cause trouble. We fix this by adding these two lines at the end of our st_test fixture:

    # Needs `import streamlit as st` at the top of the test module.
    @pytest.fixture(scope="function")
    def st_test(capsys):
        yield
        ...
        st.cache_data.clear()
        st.cache_resource.clear()

Accessing Streamlit components via key mostly works great

Say you have many buttons in your app and don’t want to remember in which order they are created. Streamlit very reasonably offers you a way to specify a key when creating a widget, which we can use to select the right button in the test. To keep things organized, I put all my keys inside a StrEnum:

    from enum import StrEnum


    class UIKeys(StrEnum):
        B_DRAW_PLOT = "b_draw_plot"
        # ... other widget keys here


    def main():
        ...
        if st.button("Draw Plot", key=UIKeys.B_DRAW_PLOT):
            ...

In the test, we can write

    from app import UIKeys

    at.button(key=UIKeys.B_DRAW_PLOT).click().run()

So what’s the catch? Well, some Streamlit components accept a key argument, e.g. st.dataframe, but if you try to retrieve one by key in a test, this happens:

FAILED test.py::test_flow_again_just_in_case - TypeError: 'ElementList' object is not callable

That’s because a st.testing.v1.element_tree.Dataframe is only an Element, not a Widget, silly! So if you care about what your app is showing, and not only what it’s doing, you’re back to either remembering in which order things got called, or iterating over, say, at.dataframe and doing your test that way. As always, the least misleading documentation is the code itself.
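The TypeError is what you would expect if only widget collections implement lookup by key. Here is a simplified model of that split, with class names borrowed from the error message. These are my own stand-in classes, not Streamlit's, and the dictionaries and keys are invented for the example.

```python
class ElementList:
    """Stand-in for a plain element collection: index, iterate, measure."""

    def __init__(self, items):
        self._list = list(items)

    def __len__(self):
        return len(self._list)

    def __getitem__(self, idx):
        return self._list[idx]

    def __iter__(self):
        return iter(self._list)


class WidgetList(ElementList):
    """Stand-in for a widget collection: adds lookup by key via __call__."""

    def __call__(self, key):
        for widget in self._list:
            if widget["key"] == key:
                return widget
        raise KeyError(key)


buttons = WidgetList([{"key": "b_draw_plot", "label": "Draw Plot"}])
dataframes = ElementList([{"key": "df_prices", "rows": 50}])

# Widgets can be looked up by key.
found = buttons(key="b_draw_plot")

# Plain elements cannot: calling the list raises TypeError.
try:
    dataframes(key="df_prices")
    error = None
except TypeError as exc:
    error = str(exc)

# The fallback is to iterate and pick the element by its content.
by_iteration = [df for df in dataframes if df["rows"] == 50]

print(found["label"])
print(error)
print(len(by_iteration))
```

In a real test the fallback has the same shape: loop over at.dataframe and assert on whatever identifies the table you care about.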

And that’s it, you’re all set! Time for st.balloons()…

Takeaways

Should you do this?

At the end of this journey I know more about Streamlit internals than I ever wanted to. Usually when we add a new test to the suite, something new comes up. It feels like many of the problems I solved here should have, and could have, been solved in the test framework. If unit testing is required for you, I suggest you consider:

- Full web-based tests using Selenium, with mocking at the endpoint level, not at the code level (i.e. treating the web app as a black box). This is a great solution if you have the resources to set it up.

- Using a different framework. Streamlit in my experience seems set up for small projects such as dashboards. If unit testing is a concern for you, it might be a sign that it’s time to migrate to a heavier framework like Dash.

Closing thoughts

Streamlit is a very useful prototyping tool, available for free. If the testing framework has its quirks, so be it – now you know how to work around them, if you have to.